Inspired by a fellow YMH podcast (warning NSFW) fan/mommy, Eric, I am trying my hand at analyzing YMH podcast transcripts. I plan on following along with Eric’s workflow below and diverging once I find an opportunity to personalize the analysis - aka keeping it high and tight and following proto.

Based on the structure of Eric’s blog post, this analysis will proceed as follows:

- Load R libraries

- Test API call

- Generate YMH URL list

- Data cleaning

- List API call

- Finalize API call output

I don’t have any experience in pulling transcripts from YouTube or any serious text analysis so I’m excited to try it out. Thanks for not being stingy, Mark…. I mean Eric!

Libraries

Aside from {tidyverse}, {reshape2}, and {lubridate}, Eric uses {youtubecaption} for calling YouTube’s API to get the caption transcripts and {janitor} for some of the data cleaning, namely the clean_names() function, which standardizes column names. These two packages are brand new to me so I’m excited to work with them.

Note: I learned further into this analysis that {youtubecaption} relies on Anaconda, a comprehensive Python environment, to pull from YouTube’s API. The author of {youtubecaption} states that the reason for this is the difficulty of calling YouTube’s API for the caption data in a user-friendly format. Thus, this library acts as a handy and clean way of accessing YouTube’s API through Python (Anaconda) without needing to write a custom API call yourself. I didn’t have Anaconda on this machine so I had to take a break from the analysis to install it before running functions from that library. Save yourself some time and download it before getting started!
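Since the post lists the packages without showing the loading step, here is a minimal sketch of one way to do it. It assumes the five packages named above are the only ones needed ({purrr}, used later, is attached as part of {tidyverse}); the `not_installed` helper variable is just for illustration, and where you install {youtubecaption} from is left to you.

```r
# Packages named in this post
pkgs <- c("tidyverse", "reshape2", "lubridate", "youtubecaption", "janitor")

# Check which ones are not available on this machine yet
is_available <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
not_installed <- pkgs[!is_available]

if (length(not_installed) > 0) {
  # Nothing is attached until everything is installed
  message("Install these first: ", paste(not_installed, collapse = ", "))
} else {
  # Attach every package, same as calling library() on each one
  invisible(lapply(pkgs, library, character.only = TRUE))
}
```

Checking first avoids a half-loaded session where the script fails several steps in because one package was missing.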

Test YouTube API call, episode 571

ymh_e571 <- get_caption("https://www.youtube.com/watch?v=dY818rfsJkk")

And there we go, a really straightforward way to download a transcript from a specific YouTube video. I can see why Eric used this package; that’s the easiest API call I’ve ever made. Let’s take a look at what we are working with:

head(ymh_e571)

# A tibble: 6 x 5

segment_id text start duration vid

<int> <chr> <dbl> <dbl> <chr>

1 1 oh snap there's hot gear 0.64 5.12 dY818rfsJkk

2 2 merch method dot com slash tom sagura 3.04 5.84 dY818rfsJkk

3 3 check out all our new stuff 5.76 3.12 dY818rfsJkk

4 4 these club owners will take advantage as 9.92 3.04 dY818rfsJkk

5 5 you know 12.2 2.40 dY818rfsJkk

6 6 if you don't stand your ground i don't 13.0 2.88 dY818rfsJkk

Upon inspection, the YouTube API call gives us 5 columns and just over 4500 rows of data. ‘segment_id’ would be our unique identifier for each segment, ‘text’ is obviously the speech-to-text captions we are after, ‘start’ appears to be the time stamp when the text is recorded, the value of ‘duration’ is a mystery at the moment but we’ll look into that later, and ‘vid’ is simply the YouTube video id (the characters after “watch?v=” in every YouTube video address).
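One quick way to poke at the ‘duration’ mystery is to add it to ‘start’ and compare against the next segment. The sketch below retypes the six rows printed above by hand (the printout rounds some values, so treat them as approximate) and works under the guess, not confirmed here, that ‘duration’ is the number of seconds a caption stays on screen. The `end` and `gap_to_next` columns are made up for this check.

```r
# The six segments from head(ymh_e571), retyped by hand
seg <- data.frame(
  segment_id = 1:6,
  start      = c(0.64, 3.04, 5.76, 9.92, 12.2, 13.0),
  duration   = c(5.12, 5.84, 3.12, 3.04, 2.40, 2.88)
)

# Guess: start + duration is the moment a caption leaves the screen
seg$end <- seg$start + seg$duration

# Time between one caption ending and the next one starting;
# a negative value would mean the two captions overlap
seg$gap_to_next <- c(seg$start[-1] - seg$end[-nrow(seg)], NA)

seg
```

If the guess holds, overlapping captions (negative gaps) would be expected, since auto-generated captions often show two lines at once.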

The next step would be to download the transcripts for a number of episodes, but so far we’ve only made an API call to YouTube using the exact URL for a specific video. How are we going to call a list of videos outside of manually looking up all 571 YMH videos one at a time? Eric figured out a nice solution on his blog and we’ll explore it next.

Generate YMH URL list

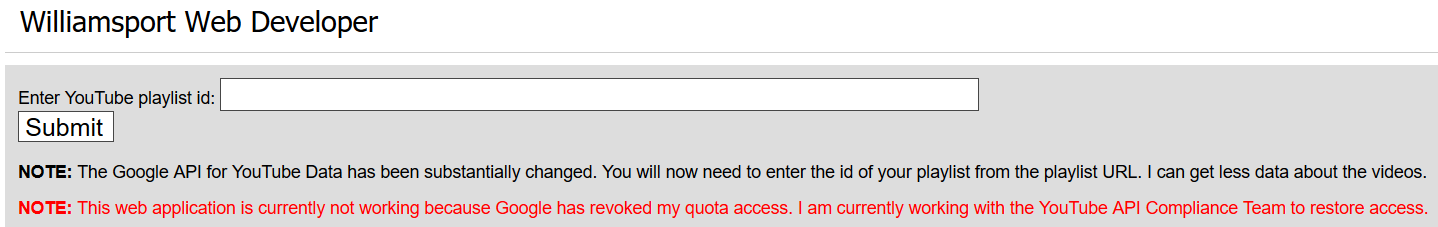

What we can do to script out a large API call is take a YMH YouTube playlist and export that to a text file. From there, we have a full list of YMH videos, their names, and, most importantly, the URLs. There are plenty of web tools and extensions that export YouTube playlist data to a .csv, and it only takes a quick Google search to find one. Eric used this tool, which looks straightforward and convenient. Once you have the list, we’ll need to clean and arrange the file to use when calling from the YouTube API.

Houston, we have a problem:

Turns out the web app Eric used to gather the YouTube playlist and episode information is no longer working. We will have to find other means of generating this list to make the API call. After a painstaking Sunday searching for alternative web apps and testing them, I was not able to find anything that fit the needs of this project. That is, until I stumbled upon youtube-dl, a command-line program created to download videos and/or audio tracks from YouTube and a host of other sites. Turns out this program can also be used to scrape the YouTube metadata of videos. It took some time (and reddit/r/youtube-dl threads) to figure out how to use the tool to generate a list of video titles and URLs from a playlist, but I come to you with the knowledge! So let’s do this, shall we?

First thing you’ll want to do is download the youtube-dl .exe Windows application from their site and place it into a folder. Open a cmd window and set that folder as your working directory. Lucky for you, I’ve done all the troubleshooting and testing of this tool. These are the commands I decided to use, mainly due to my limited knowledge of the Windows command line.

This command will result in a long csv file, with the episode title and url id all in one column:

youtube-dl.exe --flat-playlist --get-title --ignore-config --get-id -o "https://www.youtube.com/watch?v=%(id)s" "https://www.youtube.com/playlist?list=PL-i3EV1v5hLd9H1p2wT5ZD8alEY0EmxYD" >ymh_playlist.csv

Instead of dealing with the above output, I decided to just export two files, one with the playlist video titles and another with the playlist video URL IDs, and simply join them in R:

youtube-dl.exe --flat-playlist --get-title --ignore-config -o "https://www.youtube.com/watch?v=%(id)s" "https://www.youtube.com/playlist?list=PL-i3EV1v5hLd9H1p2wT5ZD8alEY0EmxYD" >ymh_title.csv

youtube-dl.exe --flat-playlist --get-id --ignore-config -o "https://www.youtube.com/watch?v=%(id)s" "https://www.youtube.com/playlist?list=PL-i3EV1v5hLd9H1p2wT5ZD8alEY0EmxYD" >ymh_id.csv

You should end up with either one or two .csv files with a single column of data, depending on your preferred method. Let’s take a look at them:

playlist_title <- read.csv("~/GitHub/blog_website_2.0/blog_data/ymh_title.csv",

header=FALSE)

playlist_url <- read.csv("~/GitHub/blog_website_2.0/blog_data/ymh_id.csv",

header=FALSE)

head(playlist_title)

V1

1 Your Mom's House Podcast - Ep. 572 w/ Chris Distefano

2 Your Mom's House Podcast - Ep. 571 w/ Marcus King

3 Your Mom's House Podcast - Ep. 570 w/ Ian Bagg

4 Your Mom's House Podcast - Ep. 569 w/ Duncan Trussell

5 Your Mom's House Podcast - Ep. 568 w/ Kevin Nealon

6 Your Mom's House Podcast - Ep. 567 w/ Fortune Feimster

head(playlist_url)

V1

1 wTvMDkAZZnM

2 dY818rfsJkk

3 _VCG6PzLnRA

4 J4hQnfu9nkY

5 bGklkg3m-6U

6 zSRBGlyisno

Data cleaning

We’ll do some reshaping and data cleaning next. Now I’m not the best person to explain data cleaning steps and I know there are probably simpler, faster, and more efficient ways of doing it but these worked for me. I’d suggest searching for some of the experts on {tidyverse} and {reshape2} to learn about it!

append_rows <- which(!(grepl("Your", playlist_title$V1)))

title_rows <- c(20, 20, 86, 98, 250, 251)

playlist_title[paste(title_rows, sep = ","),] <- paste(playlist_title[paste(

title_rows, sep = ","),], ",",playlist_title[paste(

append_rows, sep = ","),])

playlist_title <- playlist_title %>% filter((grepl("Your", V1))) %>%

rename("title" = V1)

playlist_url <- playlist_url %>% slice(1:245) %>% transmute(

url = paste("https://www.youtube.com/watch?v=", V1, sep = ""))

playlist_clean <- cbind(playlist_title, playlist_url)

playlist_clean <- playlist_clean %>%

## standardize the column names (lower case, no spaces or symbols)

clean_names() %>%

## split the title into show name and episode at the '-'

separate(title, c("title", "episode"), "-") %>%

## create a vid column holding just the YouTube video id; this column matches

## the "vid" output from get_caption() and will be used to join the two tables

mutate(vid = str_replace_all(url, ".*=(.*)$", "\\1"))

glimpse(playlist_clean)

Rows: 245

Columns: 4

$ title <chr> "Your Mom's House Podcast ", "Your Mom's House Podcast ", "...

$ episode <chr> " Ep. 572 w/ Chris Distefano", " Ep. 571 w/ Marcus King", "...

$ url <chr> "https://www.youtube.com/watch?v=wTvMDkAZZnM", "https://www...

$ vid <chr> "wTvMDkAZZnM", "dY818rfsJkk", "_VCG6PzLnRA", "J4hQnfu9nkY",...

Success! We have 245 rows of observations of YMH episodes, #330 to #572, plus some extra/live episodes, with titles and URLs. You’ll notice I tweaked some rows and cut off some rows in the cleaning steps. If you interrogate the data more, you’ll find that either the youtube-dl tool or the command line does not export data into a .csv cleanly. I found some of the long YouTube titles ended up having part of the title placed on a second row. Also, the last 2 videos in the playlist are marked as deleted and/or private so I removed those as well.
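One possible culprit for the split titles, and this is a guess rather than something checked against these files, is that some titles contain commas, which read.csv() treats as field separators. If that is the cause, reading the export line by line with readLines() would sidestep it. The sketch below uses a made-up three-line export written to a temp file; the titles and the `playlist_title_alt` name are hypothetical.

```r
# Write a tiny hypothetical export; the second title contains a comma
tmp <- tempfile(fileext = ".csv")
writeLines(c(
  "Your Mom's House Podcast - Ep. 3 w/ Guest C",
  "Your Mom's House Podcast - Ep. 2 w/ Guest A, Guest B",
  "Your Mom's House Podcast - Ep. 1 w/ Guest D"
), tmp)

# readLines() returns one string per line and never splits on commas
titles <- readLines(tmp)

# Same single-column shape as playlist_title, one row per video
playlist_title_alt <- data.frame(title = titles, stringsAsFactors = FALSE)
nrow(playlist_title_alt)
```

With one row per line guaranteed, the manual re-joining of title fragments would not be needed, though the deleted/private videos at the end would still have to be dropped.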

Now we can start working on pulling the transcripts for the 245 episodes we’ve generated.

List API call

The next step is to use the get_caption() function again, but this time we need it to pull the captions for the whole list of URLs. We also want to make sure get_caption() runs through the entire list and alerts us to any video it cannot successfully call. The way Eric did this was to wrap get_caption() inside the safely() function from {purrr}.

# ?safely to read up

safe_cap <- safely(get_caption)

Based on the help documents, safely() wraps a function so that it never stops on an error; instead it always returns a list with two elements, result and error, one of which is NULL. This should help us determine whether our large API call hit any errors while running.

Within the same {purrr} library, the map() function applies a function to each element of a list. Therefore, in our case, map() will apply get_caption() to each URL in the playlist and, because safely() is wrapped around get_caption(), an error on one video is recorded instead of stopping the whole run.
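To see what safely() hands back without touching YouTube, here is a minimal sketch with a made-up function standing in for get_caption(). fake_caption() and the video IDs are hypothetical; only safely(), map(), and map_lgl() come from {purrr}.

```r
library(purrr)

# Hypothetical stand-in for get_caption(): errors on one made-up ID
fake_caption <- function(vid) {
  if (vid == "bad_id") stop("no captions for this video")
  data.frame(segment_id = 1:2, text = c("hi", "mommy"), vid = vid)
}

safe_fake <- safely(fake_caption)

# map() keeps going past the failure instead of stopping the whole loop
calls <- map(c("vid_a", "bad_id", "vid_b"), safe_fake)

# Every element is a list with a `result` slot and an `error` slot
names(calls[[1]])

# Flag the calls that failed so they can be inspected or retried
failed <- map_lgl(calls, ~ !is.null(.x$error))
which(failed)
```

The same `failed` check could be run on a real list of safely() outputs to find which URLs need a second look.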

playlist_call <- map(playlist_clean$url,

safe_cap)

## warning - large print out:

## glimpse(playlist_call)

Finalizing the API call output

With the list API call finished, map() has returned a list of 245 elements, one per URL. But instead of a single output, each element holds a result output and an error output from safely(), and we want the ‘result’ output from each one. (By the way, feel free to check those safely() error messages before continuing to see if you need to trace anything back.)

To do this, we will again call the map() function as well as the pluck() function from {purrr} (you’ve got to love these function names from a library called {purrr} :D). pluck() essentially indexes a data structure and returns the desired object, in our case the resulting caption text from each URL.
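As a small illustration of the plucking step, here is a sketch on a hand-built list shaped like safely() output. The `calls` list and its contents are hypothetical. It uses the {purrr} shorthand of passing a name to map(), which extracts that element from each item, as an alternative to calling pluck() by index.

```r
library(purrr)
library(dplyr)

# Hypothetical safely()-style output: two successes and one failure
calls <- list(
  list(result = data.frame(vid = "vid_a", text = "try it out",
                           stringsAsFactors = FALSE), error = NULL),
  list(result = NULL, error = simpleError("no captions")),
  list(result = data.frame(vid = "vid_b", text = "good morning",
                           stringsAsFactors = FALSE), error = NULL)
)

captions <- calls %>%
  map("result") %>%  # pull the "result" slot out of every element
  compact() %>%      # drop the NULLs left behind by failed calls
  bind_rows()        # stack what remains into one data frame

nrow(captions)
```

The failed call simply disappears from the stacked table, which is why checking the error slots first is worthwhile.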

playlist_data <- map(1:length(playlist_call),

~pluck(playlist_call, ., "result")) %>%

compact() %>%

bind_rows() %>%

inner_join(x = playlist_clean,

y = .,

by = "vid")

glimpse(playlist_data)

Rows: 527,189

Columns: 8

$ title <chr> "Your Mom's House Podcast ", "Your Mom's House Podcast "...

$ episode <chr> " Ep. 572 w/ Chris Distefano", " Ep. 572 w/ Chris Distef...

$ url <chr> "https://www.youtube.com/watch?v=wTvMDkAZZnM", "https://...

$ vid <chr> "wTvMDkAZZnM", "wTvMDkAZZnM", "wTvMDkAZZnM", "wTvMDkAZZn...

$ segment_id <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 1...

$ text <chr> "i'm not chrissy drip drop anymore you're", "chrissy fiv...

$ start <dbl> 0.160, 1.599, 3.679, 5.279, 6.160, 8.080, 9.280, 11.679,...

$ duration <dbl> 3.519, 3.680, 2.481, 2.801, 3.120, 3.599, 5.600, 8.641, ...

Success! We’ve ended up with a table of 8 variables and over 500,000 segments of caption text. This is a good place to stop for now and we’ll pick back up in part 2 with the text analysis.

Thanks again to Eric Ekholm, whose blog post laid the foundation for me to learn this YouTube API call process!